DINO

https://arxiv.org/pdf/2104.14294 https://github.com/facebookresearch/dino

Motivation

It is an encoder.

DINO (self-distillation with no labels) is motivated by the question of whether self-supervised learning can improve vision transformers (ViTs) without labeled data. It uses a self-distillation framework in which the model learns from its own predictions, which yields stronger feature representations and better generalization.

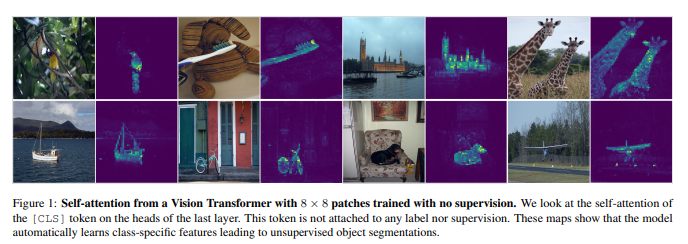

The paper makes the following observations. First, self-supervised ViT features contain explicit information about the semantic segmentation of an image, which does not emerge as clearly with supervised ViTs, nor with convnets. Second, these features are also excellent k-NN classifiers, reaching 78.3% top-1 on ImageNet with a small ViT.

Method

Training procedure

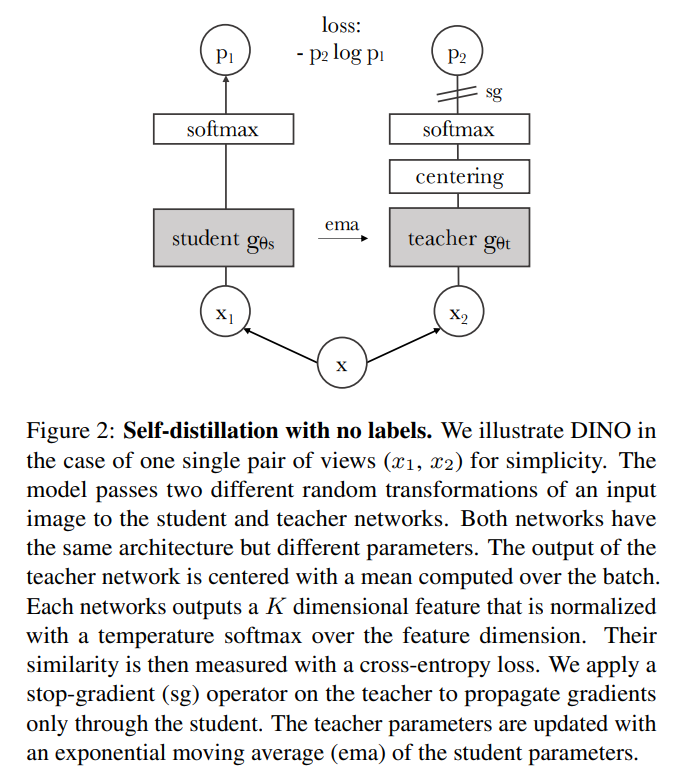

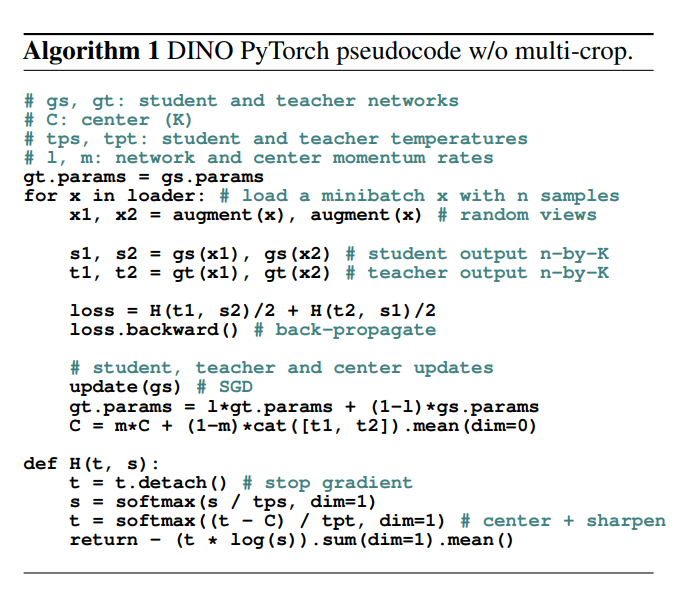

DINO's training is based on a self-distillation framework: the model is guided to learn from its own predictions, which improves its feature representations.

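Student-teacher architecture:

- This is not a true teacher-student relationship but a form of self-supervised learning. The student and teacher networks share the same architecture but have different parameters.

- Student network: the main model, responsible for learning the image feature representation.

- Teacher network: an auxiliary model whose parameters are an exponential moving average (EMA) of the student's parameters. It is not given a priori; it is built from the student during training.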

- Centering (used to avoid collapse): the centering operation depends only on first-order batch statistics and can be interpreted as adding a bias term c to the teacher: g_t(x) ← g_t(x) + c.
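The EMA teacher and the centering step from the list above can be sketched in a few lines of plain Python. This is only an illustration, not the repository's code: the function names are hypothetical, parameters are flattened into lists of floats, and the momentum values (0.996 for the teacher, 0.9 for the center) are assumptions to be checked against the paper. The sign convention here subtracts a running mean of teacher outputs, which amounts to a bias of c equal to minus that mean; treat this as an assumption as well.

```python
def ema_update(teacher_params, student_params, momentum=0.996):
    """Teacher <- momentum * teacher + (1 - momentum) * student (no gradients involved)."""
    return [momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher_params, student_params)]


def update_center(center, teacher_outputs, center_momentum=0.9):
    """Running mean of teacher outputs over the batch (a first-order statistic)."""
    batch_size = len(teacher_outputs)
    batch_mean = [sum(column) / batch_size for column in zip(*teacher_outputs)]
    return [center_momentum * c + (1.0 - center_momentum) * m
            for c, m in zip(center, batch_mean)]


def center_logits(teacher_logits, center):
    """Shift teacher logits by the running center before the softmax."""
    return [g - c for g, c in zip(teacher_logits, center)]


# Toy values chosen only to make the arithmetic easy to follow.
new_teacher = ema_update([0.0, 1.0], [1.0, 0.0], momentum=0.9)
new_center = update_center([0.0, 0.0], [[2.0, 0.0], [4.0, 2.0]], center_momentum=0.5)
centered = center_logits([2.0, 0.0], new_center)
```

Because the teacher is only ever a moving average of the student, it changes slowly and receives no gradient updates of its own. Centering keeps any single output dimension from dominating the teacher distribution.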

Softmax Distillation

$$P_s(x)^{(i)} = \frac{\exp\!\left(\tfrac{g_{\theta_s}(x)^{(i)}}{\tau_s}\right)}{\sum_{k=1}^{K} \exp\!\left(\tfrac{g_{\theta_s}(x)^{(k)}}{\tau_s}\right)}$$
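The temperature softmax above, and the cross-entropy between teacher and student distributions that it feeds into, can be sketched in plain Python as follows. The helper names are hypothetical, the temperatures `tau_t=0.04` and `tau_s=0.1` are assumed defaults rather than values stated in these notes, and the teacher logits are assumed to be already centered.

```python
import math


def log_softmax(logits, tau):
    """Numerically stable log of the temperature softmax over K output dimensions."""
    scaled = [g / tau for g in logits]
    peak = max(scaled)
    log_norm = peak + math.log(sum(math.exp(s - peak) for s in scaled))
    return [s - log_norm for s in scaled]


def softmax(logits, tau):
    """P(x)^(i) = exp(g(x)^(i) / tau) / sum_k exp(g(x)^(k) / tau)."""
    return [math.exp(v) for v in log_softmax(logits, tau)]


def distillation_loss(teacher_logits, student_logits, tau_t=0.04, tau_s=0.1):
    """Cross-entropy -sum_i P_t^(i) * log P_s^(i); the teacher side is treated as a constant."""
    p_teacher = softmax(teacher_logits, tau_t)
    log_p_student = log_softmax(student_logits, tau_s)
    return -sum(pt * lps for pt, lps in zip(p_teacher, log_p_student))


logits = [1.0, 0.5, -0.5]
p_sharp = softmax(logits, 0.04)   # lower temperature gives a more peaked distribution
p_soft = softmax(logits, 0.1)
loss_match = distillation_loss(logits, logits)
loss_mismatch = distillation_loss(logits, [-0.5, 0.5, 1.0])
```

A lower temperature sharpens the distribution, so a teacher temperature below the student's pushes the targets toward a confident prediction. In a real training loop only the student would receive gradients from this loss.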
