Deformable DETR

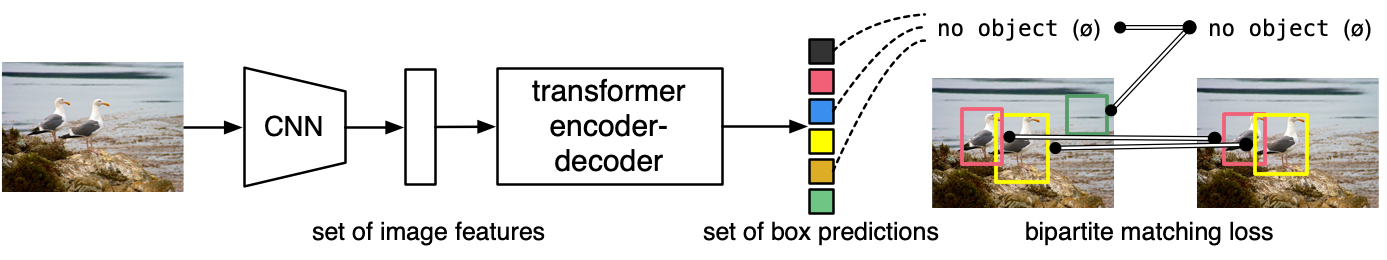

DETR

https://github.com/facebookresearch/detr

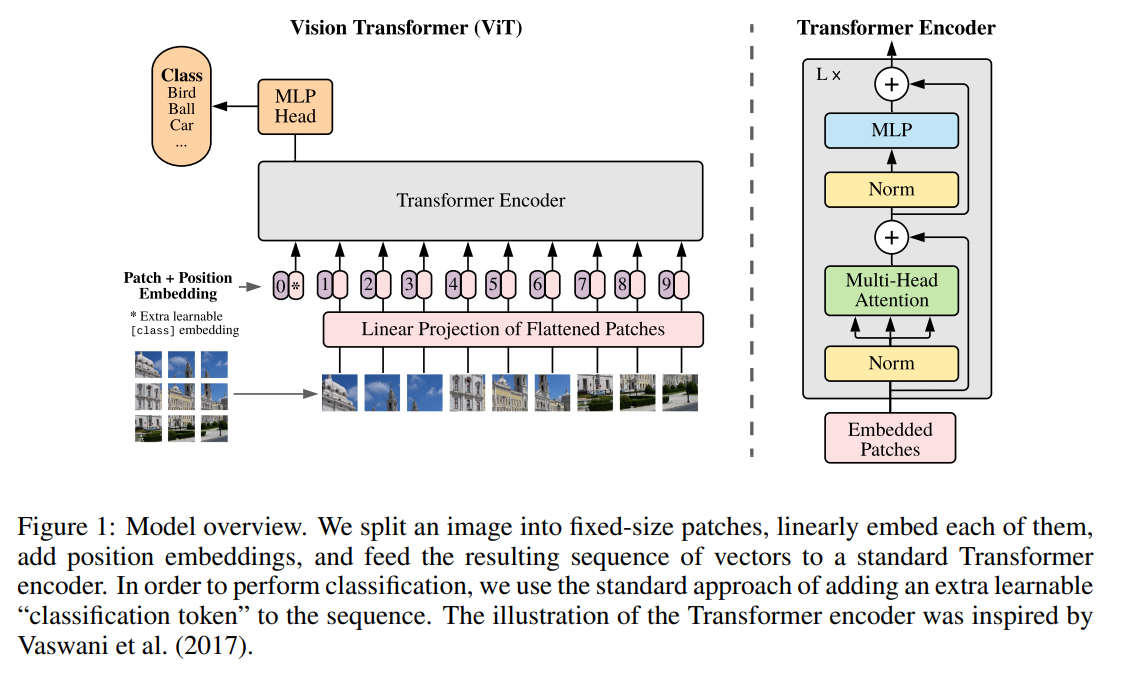

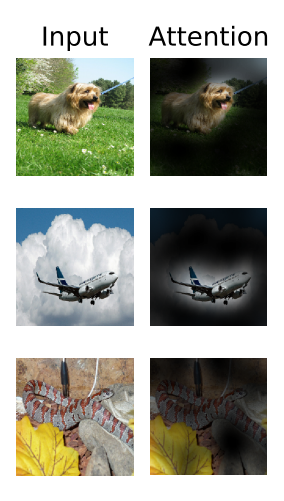

Encoder: ViT

https://arxiv.org/pdf/2010.11929

https://github.com/google-research/vision_transformer

- Linear Projections of Flattened 2D patches

- reshape the image \(x \in \mathbb{R}^{H \times W \times C}\) into a sequence of flattened 2D patches \(x_p \in \mathbb{R}^{N \times (P^2 \cdot C)}\)

- (H, W) is the resolution of the original image, C is the number of channels

- (P, P) is the resolution of each image patch

- $N = HW/P^2$ is the resulting number of patches

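A minimal sketch of the patch reshaping above, assuming the image is a plain nested list indexed `image[row][col][channel]`. The names `patchify` and `embed` are hypothetical, the ordering of values inside a patch is one possible convention, and the class token and position embeddings that ViT adds afterwards are omitted. The linked ViT code does the same thing with tensor operations.

```python
from typing import List

# image[row][col][channel]: an H x W x C image stored as nested lists
Image = List[List[List[float]]]


def patchify(image: Image, p: int) -> List[List[float]]:
    """Split an H x W x C image into N = HW / P^2 patches, each flattened to length P^2 * C."""
    h, w = len(image), len(image[0])
    if h % p or w % p:
        raise ValueError("H and W must both be divisible by the patch size P")
    patches = []
    for top in range(0, h, p):  # walk the patch grid in row-major order
        for left in range(0, w, p):
            flat: List[float] = []
            # one possible ordering inside a patch: rows, then columns, then channels
            for r in range(top, top + p):
                for c in range(left, left + p):
                    flat.extend(image[r][c])
            patches.append(flat)
    return patches


def embed(patches: List[List[float]], e: List[List[float]]) -> List[List[float]]:
    """Linear projection x_p E, with E of shape (P^2 * C) x D, applied to every patch."""
    d = len(e[0])
    return [[sum(x * e[i][j] for i, x in enumerate(patch)) for j in range(d)] for patch in patches]


# Usage: a 4 x 4 x 3 image with P = 2 gives N = 16 / 4 = 4 patches of length 4 * 3 = 12.
img = [[[float(100 * r + 10 * c + ch) for ch in range(3)] for c in range(4)] for r in range(4)]
patches = patchify(img, 2)
print(len(patches), len(patches[0]))  # 4 12
```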

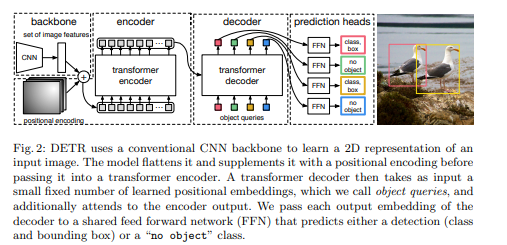

Decoder

https://arxiv.org/pdf/2005.12872

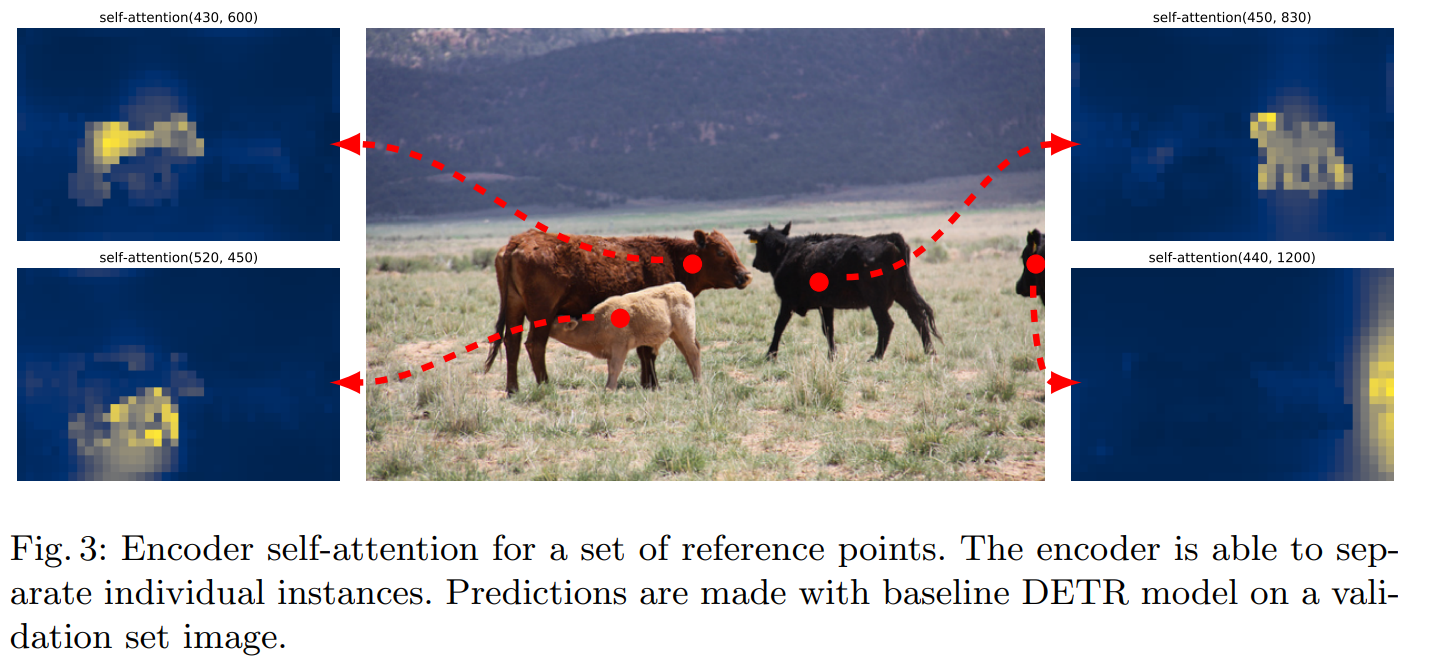

- Core idea: object queries, which learn how to locate specific objects from the raw features (Fig. 3)

- FFNs

- predict a fixed-size set of N bounding boxes

- ∅ is used to represent that no object is detected within a slot (background)
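The fixed-size set of N slots can be turned into a variable-length list of detections as sketched below. Following the DETR paper, each slot is assumed to output class logits over the dataset classes plus ∅ (taken here to be the last index) and a box given as normalized center coordinates, width and height relative to the input image. Discarding slots whose top class is ∅ is just one simple post-processing choice for illustration, and `decode_slots` is a hypothetical name.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]


def softmax(logits: Sequence[float]) -> List[float]:
    mx = max(logits)
    exps = [math.exp(v - mx) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_slots(class_logits: Sequence[Sequence[float]], boxes: Sequence[Box],
                 img_w: float, img_h: float) -> List[Tuple[int, float, Box]]:
    """Turn the N fixed prediction slots into detections.

    class_logits[i]: logits over the classes plus no-object, assumed to be the LAST index.
    boxes[i]: normalized (cx, cy, w, h) relative to the input image.
    Returns (label, score, (x0, y0, x1, y1) in pixels) for every slot not predicting no-object.
    """
    detections = []
    for logits, (cx, cy, w, h) in zip(class_logits, boxes):
        probs = softmax(logits)
        label = max(range(len(probs)), key=probs.__getitem__)
        if label == len(probs) - 1:
            continue  # the slot predicts no-object (background)
        xyxy = ((cx - w / 2) * img_w, (cy - h / 2) * img_h,
                (cx + w / 2) * img_w, (cy + h / 2) * img_h)
        detections.append((label, probs[label], xyxy))
    return detections


# Usage: N = 3 slots, 2 real classes + no-object; the middle slot is background.
logits = [[5.0, 0.0, 0.0], [0.0, 0.0, 5.0], [0.0, 4.0, 0.0]]
boxes = [(0.5, 0.5, 0.25, 0.5), (0.1, 0.1, 0.1, 0.1), (0.25, 0.75, 0.5, 0.5)]
for label, score, xyxy in decode_slots(logits, boxes, img_w=100, img_h=200):
    print(label, round(score, 3), xyxy)
```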

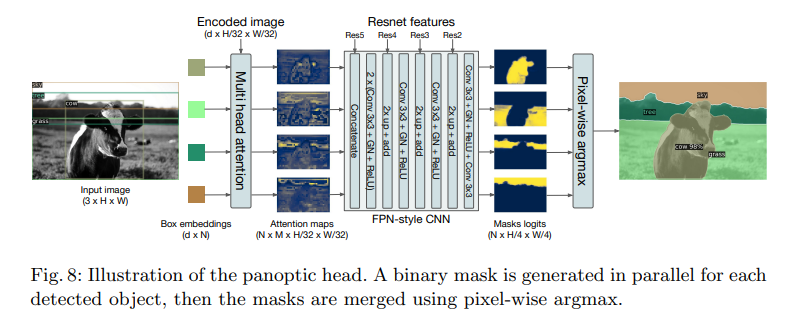

- DETR for panoptic segmentation

- takes as input the output of the transformer decoder for each object

- adds a mask head on top of the decoder outputs (Fig. 8)

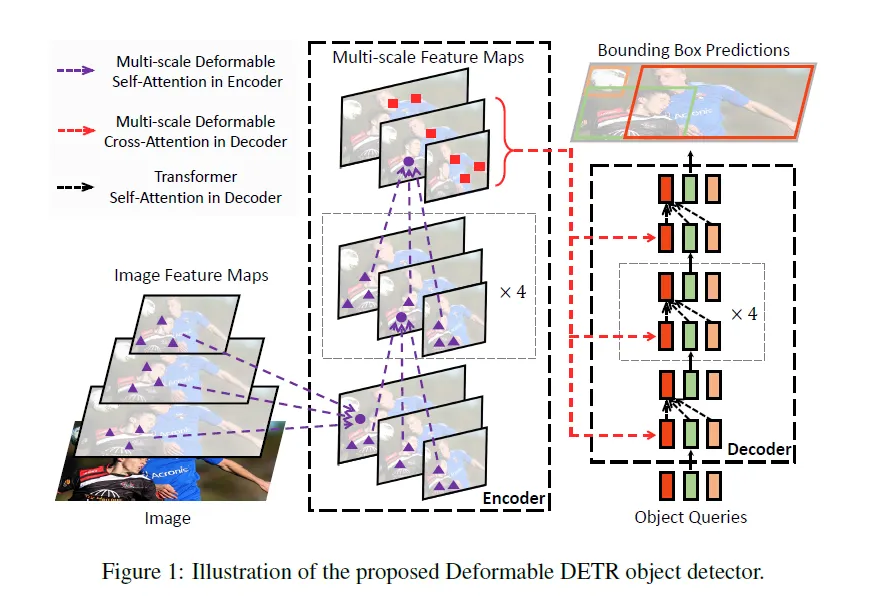

Deformable DETR

https://arxiv.org/pdf/2010.04159

https://github.com/fundamentalvision/Deformable-DETR

Encoder

Key idea

- Attend to sparse spatial locations → fast and efficient

- Deformable convolution (sparse spatial sampling) + attention (relation modeling between elements) → a new element relation modeling mechanism

- Multi-scale features + feature alignment across scales → (multi-scale) deformable attention modules

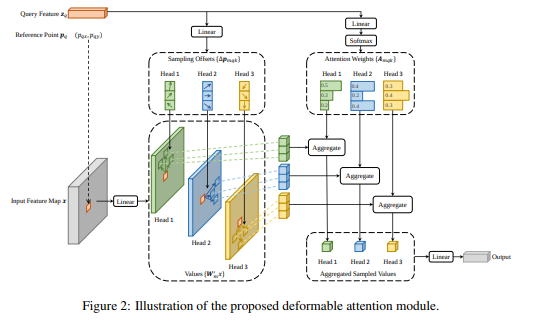

Deformable attention modules

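- $m$ indexes the attention head, and $k$ indexes the sampled keys

- $x \in \mathbb{R}^{C \times H \times W}$ is the input feature map

- $q$ indexes a query element, i.e. a point in the feature map; $z_q$ is its content feature

- Reference point $p_q$: the 2D position of $q$, i.e. its coordinates in the feature map

- $\sum_{k=1}^{K}$: unlike DETR, where every point attends to all other points, each query is assigned only a small fixed number of keys

- $K$ is the total number of sampled keys for each query (each point in the feature map), with $K \ll HW$ ($K = 4$ in the official repo)

- $\Delta p_{mqk}$ denotes the sampling offset, which shifts the sampling location away from the reference point so the model can find more meaningful sampling locations

- The offsets are predicted by the model (via a linear projection of the query feature $z_q$)

- The sampling location $p_q + \Delta p_{mqk}$ is fractional, so its feature is obtained by bilinear interpolation

- $A_{mqk}$ is the attention weight of the $k$-th sampling point in the $m$-th head, normalized so that $\sum_{k=1}^{K} A_{mqk} = 1$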
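Putting these pieces together, the paper's single-scale formula is $\mathrm{DeformAttn}(z_q, p_q, x) = \sum_{m=1}^{M} W_m \big[\sum_{k=1}^{K} A_{mqk} \cdot W'_m\, x(p_q + \Delta p_{mqk})\big]$, where $M$ is the number of heads and $W_m$, $W'_m$ are learnable projections. Below is a minimal pure-Python sketch for one query. It assumes a channel-last feature map, reference points and offsets in pixel coordinates (integers at pixel centers), zero padding outside the map, and offsets and attention logits produced by plain linear maps of $z_q$. All function and argument names are hypothetical; the official repo implements this with batched tensors and a custom CUDA kernel.

```python
import math
from typing import List, Sequence, Tuple

Vec = List[float]
Matrix = Sequence[Sequence[float]]
FeatureMap = List[List[Vec]]  # fmap[y][x] -> C-dim vector (channel-last, H x W x C)


def matvec(mat: Matrix, v: Sequence[float]) -> Vec:
    """Row-major matrix times vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in mat]


def softmax(logits: Sequence[float]) -> Vec:
    mx = max(logits)
    exps = [math.exp(v - mx) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def bilinear_sample(fmap: FeatureMap, px: float, py: float) -> Vec:
    """Interpolate fmap at fractional pixel coords (px, py); out-of-map neighbours count as zero."""
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    x0, y0 = math.floor(px), math.floor(py)
    out = [0.0] * c
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(px - xx)) * (1 - abs(py - yy))
                for ch in range(c):
                    out[ch] += weight * fmap[yy][xx][ch]
    return out


def deform_attn(z_q: Vec, p_q: Tuple[float, float], x: FeatureMap,
                w_offset: Matrix, w_attn: Matrix,
                w_value: List[Matrix], w_out: List[Matrix],
                num_heads: int, num_points: int) -> Vec:
    """Deformable attention for one query q (hypothetical sketch).

    w_offset:   (M*K*2) x C -> sampling offsets Delta p_mqk (pixels) from z_q
    w_attn:     (M*K) x C   -> attention logits; softmax over each head's K points gives A_mqk
    w_value[m]: C_v x C     -> value projection W'_m
    w_out[m]:   C x C_v     -> output projection W_m
    """
    offsets = matvec(w_offset, z_q)
    logits = matvec(w_attn, z_q)
    out = [0.0] * len(w_out[0])
    for m in range(num_heads):
        a = softmax(logits[m * num_points:(m + 1) * num_points])  # sum_k A_mqk = 1
        head = [0.0] * len(w_value[m])
        for k in range(num_points):
            i = 2 * (m * num_points + k)
            loc_x, loc_y = p_q[0] + offsets[i], p_q[1] + offsets[i + 1]  # p_q + Delta p_mqk
            sampled = matvec(w_value[m], bilinear_sample(x, loc_x, loc_y))  # W'_m x(p_q + Delta p_mqk)
            head = [h + a[k] * s for h, s in zip(head, sampled)]
        out = [o + r for o, r in zip(out, matvec(w_out[m], head))]  # sum over heads of W_m [...]
    return out


# Usage: 2 x 2 single-channel map, M = 1 head, K = 1 point, offset (0.5, 0.5) from p_q = (0, 0).
fmap = [[[0.0], [1.0]], [[2.0], [3.0]]]
print(deform_attn([1.0], (0.0, 0.0), fmap, [[0.5], [0.5]], [[0.0]], [[[1.0]]], [[[1.0]]], 1, 1))  # [1.5]
```

The sampled point falls in the middle of the four pixels, so bilinear interpolation averages them. Applying $W'_m$ after sampling gives the same result as sampling a pre-projected value map, because bilinear interpolation is linear.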

Multi-scale Deformable attention modules

- Multi-scale feature maps: $\{x^{(l)}\}_{l=1}^{L}$ denotes the $L$ input multi-scale feature maps

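- $\Delta \mathbf{p}_{mlqk}$: each feature level samples with its own offsets

- $\phi_l(\hat{p}_q)$: rescales the normalized reference point $\hat{p}_q \in [0, 1]^2$ to the coordinates of the $l$-th level feature map, so the same query is aligned across levels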

- $\sum_{l=1}^{L}$: the features sampled from the different (multi-scale) levels are combined by summation
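In the notation above, the multi-scale module computes $\mathrm{MSDeformAttn}(z_q, \hat{p}_q, \{x^{(l)}\}_{l=1}^{L}) = \sum_{m=1}^{M} W_m \big[\sum_{l=1}^{L}\sum_{k=1}^{K} A_{mlqk} \cdot W'_m\, x^{(l)}(\phi_l(\hat{p}_q) + \Delta p_{mlqk})\big]$, with the weights normalized jointly so that $\sum_{l}\sum_{k} A_{mlqk} = 1$. The sketch below isolates the two multi-scale ingredients, $\phi_l$ and the sum over levels, for a single head with $W_m$ and $W'_m$ taken as identity and with offsets and logits passed in directly. Two assumptions are hypothetical simplifications: $\phi_l$ uses a pixel-center convention, and offsets are given in pixels of each level. The names are illustrative, not the official repo's API.

```python
import math
from typing import List, Tuple

Vec = List[float]
FeatureMap = List[List[Vec]]  # fmap[y][x] -> C-dim vector (channel-last, H x W x C)


def bilinear_sample(fmap: FeatureMap, px: float, py: float) -> Vec:
    """Interpolate fmap at fractional pixel coords (px, py); out-of-map neighbours count as zero."""
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    x0, y0 = math.floor(px), math.floor(py)
    out = [0.0] * c
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(px - xx)) * (1 - abs(py - yy))
                for ch in range(c):
                    out[ch] += weight * fmap[yy][xx][ch]
    return out


def phi(p_hat: Tuple[float, float], fmap: FeatureMap) -> Tuple[float, float]:
    """phi_l: map a normalized point in [0, 1]^2 to pixel coordinates of one level.
    Assumes pixel centres at integer coordinates, so 0 and 1 land on the map's outer edges."""
    h, w = len(fmap), len(fmap[0])
    return p_hat[0] * w - 0.5, p_hat[1] * h - 0.5


def ms_deform_attn_one_head(p_hat: Tuple[float, float], levels: List[FeatureMap],
                            offsets: List[List[Tuple[float, float]]],
                            logits: List[List[float]]) -> Vec:
    """Single-head multi-scale deformable attention with W_m and W'_m taken as identity.

    offsets[l][k]: offset in pixels of level l; logits[l][k]: attention logit of that point.
    The softmax runs jointly over all L*K points, so sum_l sum_k A_lk = 1.
    """
    mx = max(v for per_level in logits for v in per_level)
    exps = [[math.exp(v - mx) for v in per_level] for per_level in logits]
    total = sum(sum(per_level) for per_level in exps)
    out = [0.0] * len(levels[0][0][0])
    for fmap, level_offsets, level_exps in zip(levels, offsets, exps):
        ref_x, ref_y = phi(p_hat, fmap)  # the same query, aligned to this level's grid
        for (dx, dy), e in zip(level_offsets, level_exps):
            sampled = bilinear_sample(fmap, ref_x + dx, ref_y + dy)
            out = [o + (e / total) * s for o, s in zip(out, sampled)]  # accumulate over l and k
    return out


# Usage: a 4 x 4 level of ones and a 2 x 2 level of threes, one point per level, equal logits.
fine = [[[1.0] for _ in range(4)] for _ in range(4)]
coarse = [[[3.0] for _ in range(2)] for _ in range(2)]
print(phi((0.5, 0.5), fine), phi((0.5, 0.5), coarse))  # (1.5, 1.5) (0.5, 0.5)
print(ms_deform_attn_one_head((0.5, 0.5), [fine, coarse], [[(0.0, 0.0)], [(0.0, 0.0)]], [[0.0], [0.0]]))  # [2.0]
```

The same normalized point lands at the center of both maps even though their resolutions differ, which is the cross-level alignment that $\phi_l$ provides.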

Decoder

Deformable Transformer Decoder

Largely the same as the DETR decoder; the following is quoted from the original paper:

There are cross-attention and self-attention modules in the decoder. The query elements for both types of attention modules are of object queries. In the cross-attention modules, object queries extract features from the feature maps, where the key elements are of the output feature maps from the encoder. In the self-attention modules, object queries interact with each other, where the key elements are of the object queries. Since our proposed deformable attention module is designed for processing convolutional feature maps as key elements, we only replace each cross-attention module to be the multi-scale deformable attention module, while leaving the self-attention modules unchanged.
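To make the replacement concrete, here is a structural sketch of one decoder layer under simplifying assumptions: single-head self-attention without learned projections, LayerNorm and dropout omitted, and the cross-attention and FFN passed in as callables. For Deformable DETR the `cross_attn` callable would be the multi-scale deformable attention evaluated around each query's reference point. `decoder_layer` and `self_attention` are hypothetical names, not the official repo's API.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec = List[float]


def self_attention(queries: Sequence[Vec]) -> List[Vec]:
    """Single-head scaled dot-product attention where the object queries are also keys and values."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in queries]
        mx = max(scores)
        weights = [math.exp(s - mx) for s in scores]
        total = sum(weights)
        out.append([sum(w / total * v[c] for w, v in zip(weights, queries)) for c in range(d)])
    return out


def add(a: Vec, b: Vec) -> Vec:
    return [x + y for x, y in zip(a, b)]


def decoder_layer(queries: List[Vec], refs: List[Tuple[float, float]],
                  cross_attn: Callable[[Vec, Tuple[float, float]], Vec],
                  ffn: Callable[[Vec], Vec]) -> List[Vec]:
    """One simplified decoder layer: self-attention -> cross-attention -> FFN, each with a residual."""
    # 1) self-attention among object queries: kept unchanged from DETR
    x = [add(q, s) for q, s in zip(queries, self_attention(queries))]
    # 2) cross-attention to the encoder output: the only module Deformable DETR replaces,
    #    here a pluggable callable that receives each query's reference point
    x = [add(q, cross_attn(q, r)) for q, r in zip(x, refs)]
    # 3) feed-forward network, again with a residual connection
    return [add(q, ffn(q)) for q in x]


def zeros(*args: object) -> Vec:
    """Placeholder sub-module that contributes nothing."""
    return [0.0, 0.0]


# Usage: with a single query, self-attention returns the query itself, so the residual doubles it.
print(decoder_layer([[1.0, 2.0]], [(0.5, 0.5)], zeros, zeros))  # [[2.0, 4.0]]
```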
