InstantSplat

https://instantsplat.github.io/

https://arxiv.org/pdf/2403.20309v3

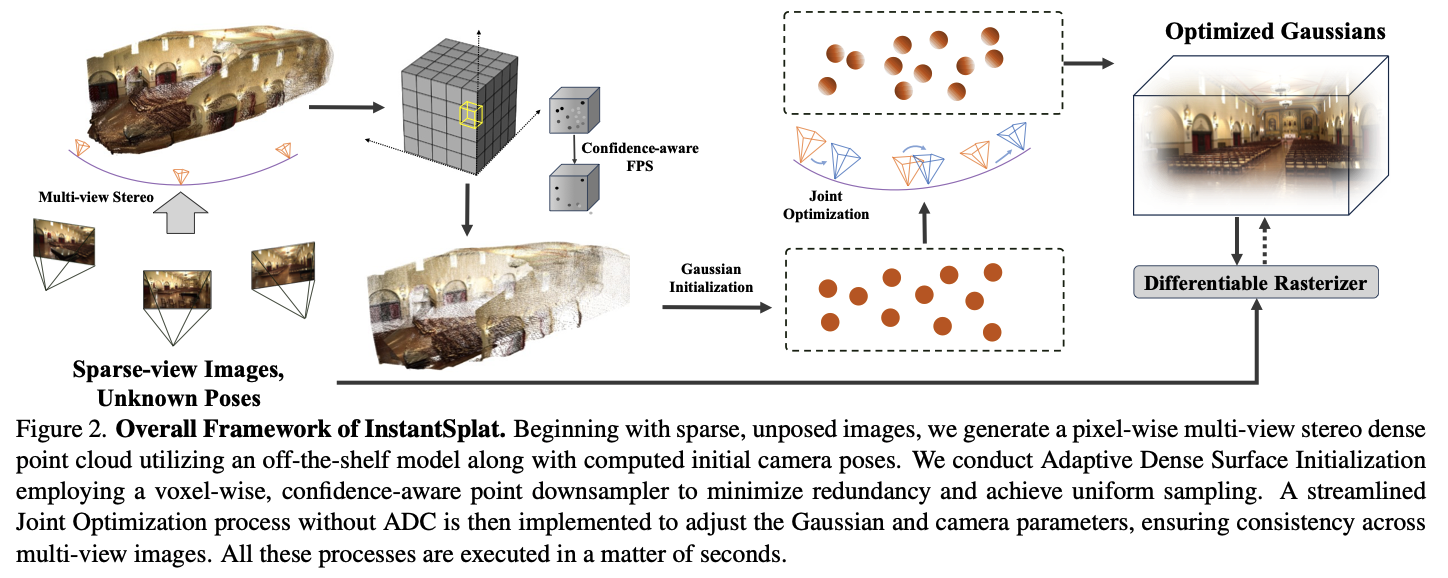

Goal: infer the camera poses, then jointly optimize the Gaussians G and the camera poses P.

Recovering Camera Parameters

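- The per-camera focal length is estimated by solving a simple optimization problem with the Weiszfeld algorithm.

- \[f^{*} = \arg \min_{f} \sum_{i=0}^{W} \sum_{j=0}^{H} O^{i,j}\left\|(i', j') - f \frac{ \left(P^{i,j,0}, P^{i,j,1}\right) }{ P^{i,j,2} }\right\|\]

- Here \(O^{i,j}\) is the confidence at pixel \((i, j)\), \(P^{i,j,\cdot}\) is the predicted 3D point at that pixel, and \((i', j') = (i - \frac{W}{2}, j - \frac{H}{2})\) are pixel coordinates relative to the image center.

- \(\bar{f} = \frac{1}{N} \sum_{i=1}^{N} f^{*}_{i}\): the authors stabilize the estimate by averaging the per-view focal lengths over all \(N\) training views. The averaged value \(\bar{f}\) is the focal length used in all subsequent steps.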

- Camera Transformation

- \(T = [R \mid t]\) can be computed by RANSAC with PnP for each image pair.
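The focal-length objective above can be sketched as an iteratively reweighted least-squares loop in the Weiszfeld style. This is a minimal NumPy sketch, not the authors' implementation: the function names `estimate_focal_weiszfeld` and `average_focal`, the iteration count, and the assumption that the principal point sits at the image center are all illustrative choices.

```python
import numpy as np

def estimate_focal_weiszfeld(pointmap, conf=None, n_iters=10, eps=1e-8):
    """Estimate one focal length from an (H, W, 3) point map.

    Minimizes sum_ij O_ij * || (i', j') - f * (X, Y) / Z || with
    Weiszfeld-style reweighting (hypothetical helper, for illustration).
    """
    H, W, _ = pointmap.shape
    # Pixel coordinates relative to the image center (assumed principal point).
    jj, ii = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    pix = np.stack([ii - W / 2, jj - H / 2], axis=-1).reshape(-1, 2).astype(np.float64)
    pts = pointmap.reshape(-1, 3).astype(np.float64)
    o = np.ones(len(pts)) if conf is None else conf.reshape(-1).astype(np.float64)

    # Keep only points in front of the camera to avoid dividing by ~0.
    valid = pts[:, 2] > eps
    pix, pts, o = pix[valid], pts[valid], o[valid]

    q = pts[:, :2] / pts[:, 2:3]          # (X/Z, Y/Z)
    dot_qp = (q * pix).sum(-1)
    dot_qq = (q * q).sum(-1)

    # Initial guess: confidence-weighted least squares.
    f = (o * dot_qp).sum() / (o * dot_qq).sum()
    for _ in range(n_iters):
        # Reweight each pixel by confidence / current residual norm.
        r = np.linalg.norm(pix - f * q, axis=-1)
        w = o / np.maximum(r, eps)
        f = (w * dot_qp).sum() / (w * dot_qq).sum()
    return float(f)

def average_focal(focals):
    """Average the per-view estimates to get the shared focal length."""
    return float(np.mean(focals))
```

Calling `estimate_focal_weiszfeld` once per training view and passing the results to `average_focal` gives the averaged focal length \(\bar{f}\).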

Scaling pair-wise to Globally Aligned Poses

Using DUSt3R

- First construct a complete connectivity graph $G = (V, \epsilon)$, where each vertex in $V$ is the point cloud of a single image and each edge \(e = (n, m) \in \epsilon\) is an image pair.

- Initial point clouds: \(\{ P_i \in \mathbb{R}^{H \times W \times 3} \}_{i=1}^{N}\)

- Globally aligned point clouds: \(\{ \tilde{P}_i \in \mathbb{R}^{H \times W \times 3} \}_{i=1}^{N}\)

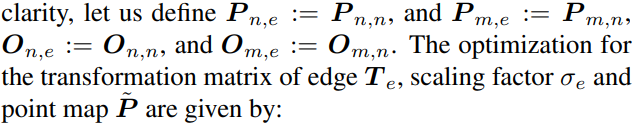

Global alignment

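- Optimize the world-frame point maps \(\tilde{P}\), the per-pair transformation matrices \(T_e\), and the per-pair scale factors \(\sigma_e\):

- \[\tilde{P}^{*} = \arg \min_{\tilde{P},\,T,\,\sigma} \sum_{e \in \epsilon} \sum_{v \in e} \sum_{i=1}^{HW} O_{i}^{v,e} \, \big\| \tilde{P}^{i}_{v} - \sigma_{e} T_{e} P^{i}_{v,e} \big\|\]

- The same $T_e$ should align both pair-wise pointmaps $P_{n,e}$ and $P_{m,e}$ with the world-coordinate pointmaps \(\tilde{P}_{n}\) and \(\tilde{P}_{m}\), since both pair-wise pointmaps are expressed in the same coordinate frame.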
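To make the global alignment objective concrete, the sketch below only evaluates it for given parameters; it does not perform the optimization. It is an illustrative NumPy version under assumptions: the function name `alignment_loss`, the dictionary-based data layout, and the reading of \(\sigma_e T_e P\) as "apply the rigid transform, then scale" are mine, not taken from the authors' code.

```python
import numpy as np

def alignment_loss(world_pts, pair_pts, conf, T, sigma):
    """Evaluate sum_e sum_{v in e} sum_i O_i^{v,e} * || P~_v^i - sigma_e T_e P_{v,e}^i ||.

    world_pts: dict  v      -> (HW, 3) world-frame point map P~_v
    pair_pts:  dict (e, v)  -> (HW, 3) point map of view v predicted in pair e
    conf:      dict (e, v)  -> (HW,)   confidence O^{v,e}
    T:         dict  e      -> (4, 4)  rigid transform of pair e
    sigma:     dict  e      -> float   scale factor of pair e
    (Hypothetical layout, chosen for readability.)
    """
    total = 0.0
    for (e, v), P in pair_pts.items():
        R, t = T[e][:3, :3], T[e][:3, 3]
        # Rigidly transform the pair-wise points, then apply the pair's scale.
        pred = sigma[e] * (P @ R.T + t)
        residual = np.linalg.norm(world_pts[v] - pred, axis=-1)
        total += float((conf[(e, v)] * residual).sum())
    return total
```

In practice this quantity would be minimized with a gradient-based optimizer over the world point maps, transforms, and scales; the function above is only meant to show which terms are compared.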

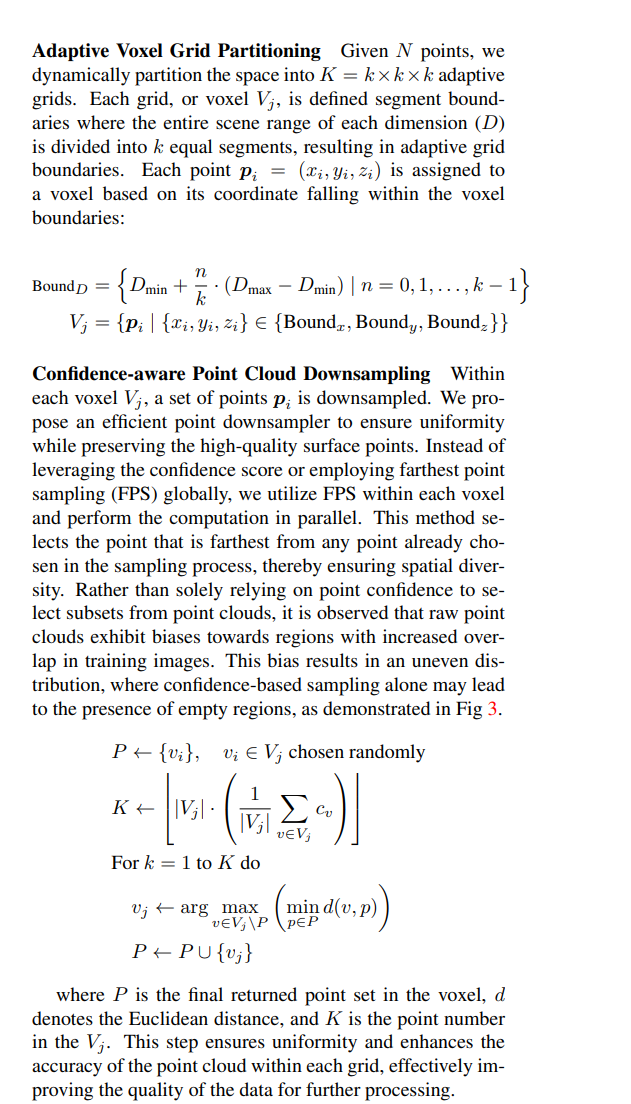

Global Point Initialization

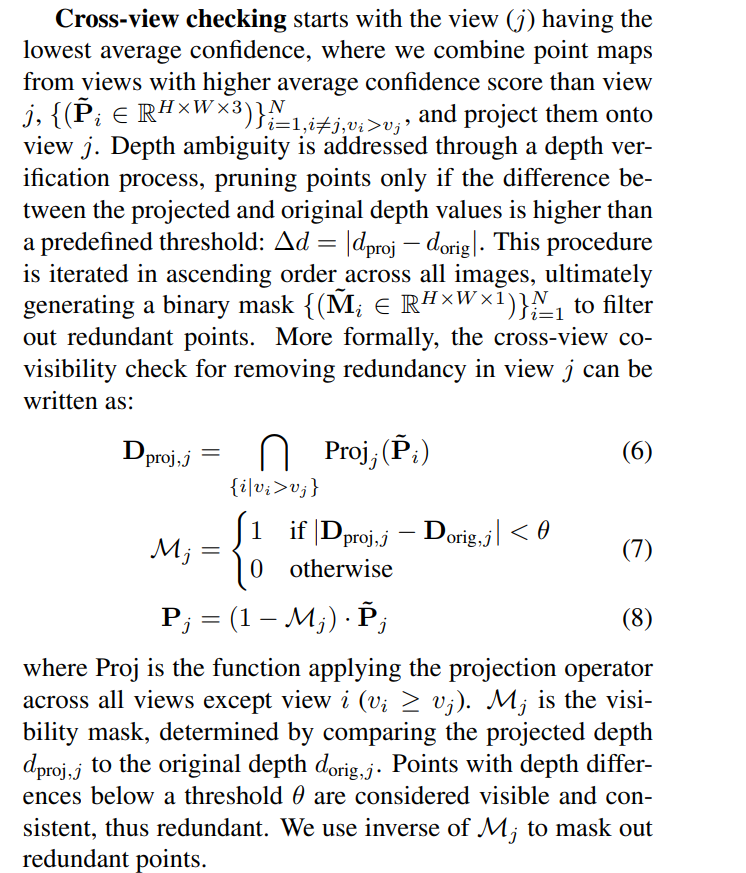

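As I understand it, this step is essentially confidence-based: redundant, overlapping points are removed (or masked out).

However, the authors give two different formulas in different versions of the paper: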

arxiv_V6, arxiv_V3

Joint Optimization for Alignment

\[G^*, T^* = \arg \min_{G, T} \sum_{v \in \mathcal{N}} \sum_{i=1}^{HW} \Big\| \tilde{C}^{i}_{v}(G, T) - C^{i}_{v}(G, T) \Big\|\]

```python
###########################################################
# InstantSplatting (excerpt from the render function)
# means3D = pc.get_xyz
# Build the learnable world-to-camera matrix from the camera's quaternion and translation.
rel_w2c = torch.eye(4, device=self._xyz.device)
quaternion = viewpoint_camera.quaternion
rel_w2c[:3, :3] = quaternion_to_matrix(normalize_quaternion(quaternion.unsqueeze(0))).squeeze(0)
rel_w2c[:3, 3] = viewpoint_camera.T

# Transform mean and rot of Gaussians to camera frame
gaussians_xyz = self.get_xyz.clone()
gaussians_rot = self.get_rotation.clone()

# Tie the camera pose to the Gaussians so that both can be optimized jointly and differentiably.
xyz_ones = torch.ones(gaussians_xyz.shape[0], 1, dtype=gaussians_xyz.dtype, device=gaussians_xyz.device)
xyz_homo = torch.cat((gaussians_xyz, xyz_ones), dim=1)
# inverse(detached pose) @ pose leaves the values unchanged, but gradients flow through the right factor.
gaussians_xyz_trans = (rel_w2c.detach().inverse() @ rel_w2c @ xyz_homo.T).T[:, :3]
# Same idea for rotations: conjugate of the detached quaternion times (quaternion * rotation).
gaussians_rot_trans = quaternion_raw_multiply(quaternion.detach() * torch.tensor([1, -1, -1, -1], device=quaternion.device), quaternion_raw_multiply(quaternion, gaussians_rot))
###########################################################

means3D = gaussians_xyz_trans
rotations = gaussians_rot_trans # pc.get_rotation
```
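The pose-binding step in the excerpt multiplies the Gaussians by the pose and by the inverse of a detached copy of the same pose. Numerically the two factors cancel, so the Gaussians keep their values; in PyTorch the detached factor is treated as a constant, so gradients reach the camera pose only through the non-detached factor. The NumPy sketch below checks just the value-preserving part, since NumPy has no autograd. The helper names (`quat_mul`, `quat_conj`, `rebind_points`, `rebind_rotations`) are hypothetical, and quaternions are assumed to be unit-norm with the real part first.

```python
import numpy as np

def quat_mul(a, b):
    """Hamilton product of two quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return np.array([
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    ])

def quat_conj(q):
    """Conjugate; equals the inverse when q has unit norm."""
    return q * np.array([1.0, -1.0, -1.0, -1.0])

def rebind_points(w2c, xyz):
    """Value of inverse(w2c) @ w2c @ xyz_homo: returns xyz unchanged."""
    ones = np.ones((xyz.shape[0], 1))
    homo = np.concatenate([xyz, ones], axis=1)
    return (np.linalg.inv(w2c) @ w2c @ homo.T).T[:, :3]

def rebind_rotations(q, rots):
    """Value of conj(q) * (q * r) for each rotation r: returns r for unit q."""
    return np.stack([quat_mul(quat_conj(q), quat_mul(q, r)) for r in rots])
```

If the camera quaternion is not unit-norm, the rotation product is scaled by its squared norm rather than being an exact identity, which is worth keeping in mind when reading the excerpt.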
