Pre-trained Diffusion

Using pre-trained diffusion models

Few-shot Adaptation

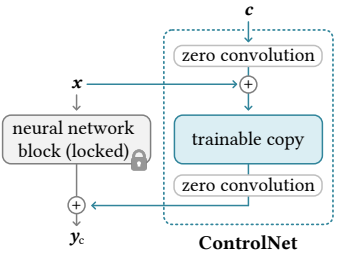

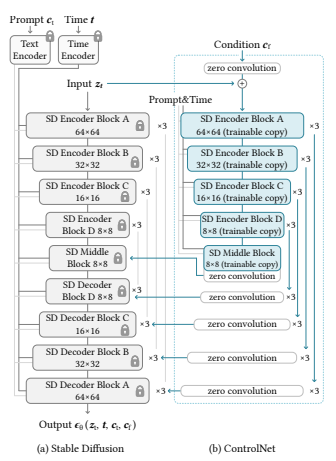

ControlNet [Zhang et al., 2023]:

- Add a few additional learnable parameters while keeping the pre-trained noise prediction network frozen.

- To encode the conditional image (e.g., an edge map), make a trainable copy of the pre-trained encoder: it starts from the pre-trained parameters and is updated during fine-tuning.

- Combine the encoded conditional information with the noisy-image features through zero convolutions: 1x1 convolution layers (y = ax + b) whose weight a and bias b are initialized to zero, so at the start of fine-tuning the new branch adds nothing to the frozen network's output.
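A minimal NumPy sketch of the zero-convolution idea, assuming a toy "block" (a 1x1 channel mix followed by ReLU) in place of a real U-Net encoder block. The class and function names are hypothetical, not ControlNet's actual code. It shows why the zero initialization matters: at the start of fine-tuning the output equals the frozen block's output, so training begins exactly from the pre-trained model's behavior.

```python
import numpy as np

def zero_conv_init(c_in, c_out):
    """Parameters of a 1x1 convolution (weight a, bias b), both initialized to zero."""
    return np.zeros((c_out, c_in)), np.zeros(c_out)

def conv1x1(x, a, b):
    """1x1 convolution on a (C, H, W) feature map: y = a x + b at every pixel."""
    return np.einsum("oc,chw->ohw", a, x) + b[:, None, None]

class ToyControlNetBlock:
    """Hypothetical toy: frozen block + trainable copy joined by zero convolutions."""

    def __init__(self, channels, cond_channels, rng):
        # Frozen "pre-trained" weights (a stand-in for a real encoder block).
        self.w_frozen = rng.standard_normal((channels, channels))
        # Trainable copy, initialized from the frozen weights.
        self.w_copy = self.w_frozen.copy()
        # Zero conv on the condition (input side) and on the copy's output.
        self.zin_a, self.zin_b = zero_conv_init(cond_channels, channels)
        self.zout_a, self.zout_b = zero_conv_init(channels, channels)

    def block(self, x, w):
        # Toy block: channel mixing followed by ReLU.
        return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)

    def forward(self, x, cond):
        y_frozen = self.block(x, self.w_frozen)
        # The trainable copy sees the noisy features plus the zero-conv'd condition.
        y_copy = self.block(x + conv1x1(cond, self.zin_a, self.zin_b), self.w_copy)
        # Its output is added back through the output zero conv.
        return y_frozen + conv1x1(y_copy, self.zout_a, self.zout_b)
```

Although a and b start at zero, their gradients do not vanish (the gradient with respect to a depends on the layer's input), so the zero convolutions can still learn during fine-tuning.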

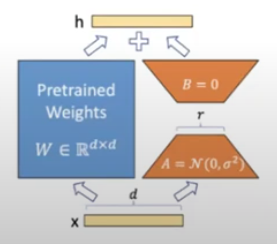

Low-Rank Adaptation (LoRA)

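Similar to ControlNet, add an extra branch alongside each layer that takes the same input and outputs an offset that is added to the layer's output.

Low-rank approximation: the weight update is factored as ΔW = BA with a rank r much smaller than the layer's dimensions. This is equivalent to two stacked linear layers (no nonlinearity in between) with a low-dimensional intermediate output.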
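A minimal NumPy sketch of a LoRA-adapted linear layer, assuming the initialization described in the LoRA paper (A random, B zero) and a scaling factor alpha / r. The class name, the 0.01 init scale, and the sizes used below are illustrative assumptions. The output is h = W0 x + (alpha / r) B A x, so the side branch is two linear maps through an r-dimensional bottleneck.

```python
import numpy as np

class LoRALinear:
    """Hypothetical sketch: frozen weight W0 plus a trainable low-rank update B @ A."""

    def __init__(self, w0, r, alpha, rng):
        d_out, d_in = w0.shape
        self.w0 = w0                                      # frozen pre-trained weight
        self.A = rng.standard_normal((r, d_in)) * 0.01    # down-projection d_in -> r
        self.B = np.zeros((d_out, r))                     # up-projection r -> d_out, zero-init
        self.scale = alpha / r

    def __call__(self, x):
        # x: (batch, d_in). Frozen path plus the low-rank offset branch.
        return x @ self.w0.T + self.scale * (x @ self.A.T) @ self.B.T

    def merged_weight(self):
        # After training, the update can be folded into W0 for inference.
        return self.w0 + self.scale * self.B @ self.A

    def num_trainable(self):
        return self.A.size + self.B.size
```

Because B starts at zero, the adapted layer initially matches the frozen one. With d_out = 64, d_in = 32 and r = 4, the adapter has 4·32 + 64·4 = 384 trainable parameters versus 2048 in W0.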

Zero-Shot Applications

Edit and inpaint images using a pre-trained image diffusion model, without any additional training

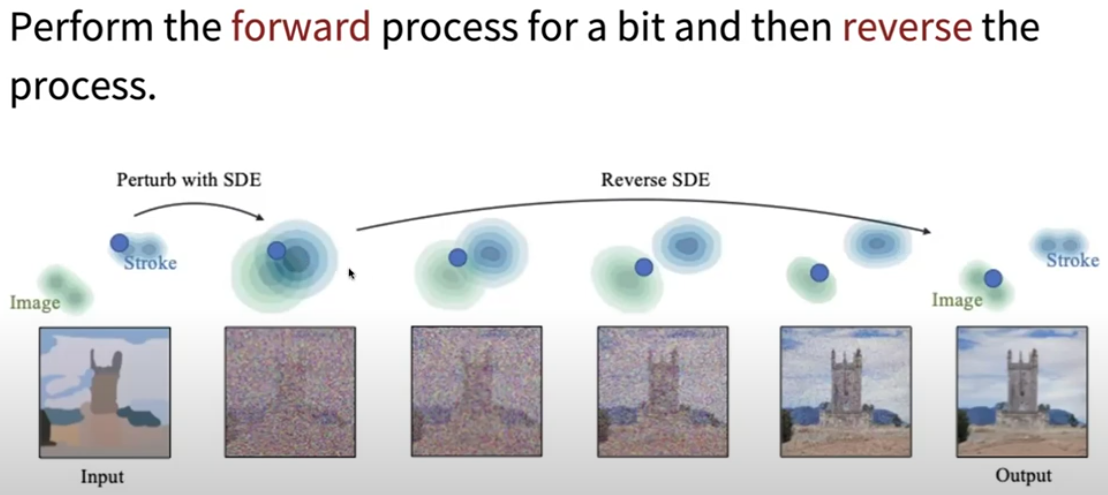

SDEdit [Meng et al., 2022]:

- Image generation from sketches

- Image editing from scribbles
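SDEdit adds noise to the guide image (a sketch or a scribbled edit) up to an intermediate step t0, then runs the ordinary reverse diffusion from t0 down to 0. A minimal NumPy sketch, assuming a linear DDPM beta schedule and a noise predictor `eps_model(x_t, t)`; the function names and schedule values are hypothetical placeholders for a real pre-trained model.

```python
import numpy as np

def make_schedule(T=100, beta_start=1e-4, beta_end=0.02):
    # Index 0 is the clean image (beta_0 = 0, alpha_bar_0 = 1); steps 1..T are noisy.
    betas = np.concatenate([[0.0], np.linspace(beta_start, beta_end, T)])
    return betas, np.cumprod(1.0 - betas)

def q_sample(x0, t, alpha_bars, rng):
    """Forward process: sample x_t ~ q(x_t | x_0)."""
    noise = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise

def p_step(x_t, t, eps_model, betas, alpha_bars, rng):
    """One ancestral DDPM reverse step x_t -> x_{t-1} (variance beta_t)."""
    eps = eps_model(x_t, t)
    mean = (x_t - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(1.0 - betas[t])
    if t > 1:
        return mean + np.sqrt(betas[t]) * rng.standard_normal(x_t.shape)
    return mean

def sdedit(x_guide, eps_model, t0, betas, alpha_bars, rng):
    """Noise the guide image up to step t0, then denoise back to step 0."""
    x = q_sample(x_guide, t0, alpha_bars, rng)
    for t in range(t0, 0, -1):
        x = p_step(x, t, eps_model, betas, alpha_bars, rng)
    return x
```

The choice of t0 sets the trade-off: a larger t0 gives more realistic outputs that are less faithful to the guide, while a smaller t0 stays closer to the input.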

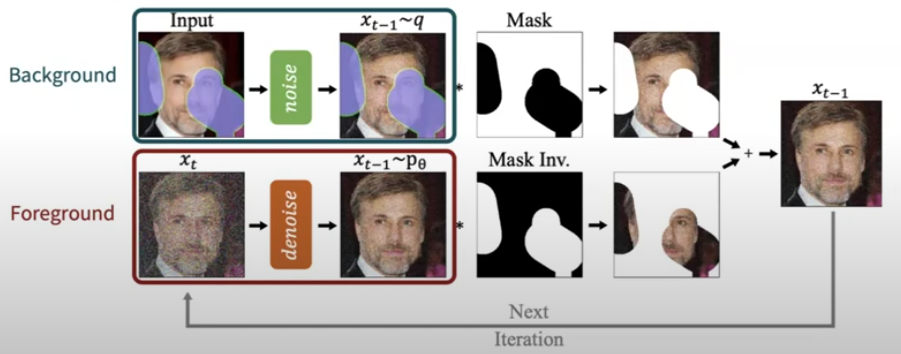

RePaint [Lugmayr et al., 2022]
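RePaint inpaints with an unconditional model: at every reverse step, the known region of x_{t-1} is replaced by a forward-noised copy of the original image, and each step is repeated several times (re-noising x_{t-1} back to x_t) so the generated and known regions harmonize. A simplified NumPy sketch, assuming a linear DDPM beta schedule and a hypothetical noise predictor `eps_model(x_t, t)`; it only jumps back one step at a time, whereas the paper's schedule can also jump back several steps.

```python
import numpy as np

def make_schedule(T=50, beta_start=1e-4, beta_end=0.02):
    # Index 0 is the clean image (beta_0 = 0, alpha_bar_0 = 1); steps 1..T are noisy.
    betas = np.concatenate([[0.0], np.linspace(beta_start, beta_end, T)])
    return betas, np.cumprod(1.0 - betas)

def repaint(x0, mask, eps_model, betas, alpha_bars, rng, resample=5):
    """Fill pixels where mask == 0 and keep pixels where mask == 1.

    resample: how many times each reverse step is repeated (U in RePaint).
    """
    T = len(betas) - 1
    x = rng.standard_normal(x0.shape)  # x_T ~ N(0, I)
    for t in range(T, 0, -1):
        n_repeats = resample if t > 1 else 1
        for u in range(n_repeats):
            # Known region: forward-noise the original image to step t-1.
            noise = rng.standard_normal(x0.shape)
            x_known = np.sqrt(alpha_bars[t - 1]) * x0 + np.sqrt(1.0 - alpha_bars[t - 1]) * noise
            # Unknown region: one ancestral DDPM reverse step x_t -> x_{t-1}.
            eps = eps_model(x, t)
            x_unknown = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(1.0 - betas[t])
            if t > 1:
                x_unknown = x_unknown + np.sqrt(betas[t]) * rng.standard_normal(x0.shape)
            # Stitch the two regions together with the mask.
            x_prev = mask * x_known + (1.0 - mask) * x_unknown
            if u < n_repeats - 1:
                # Resampling: diffuse x_{t-1} back to x_t via q(x_t | x_{t-1}).
                x = np.sqrt(1.0 - betas[t]) * x_prev + np.sqrt(betas[t]) * rng.standard_normal(x0.shape)
        x = x_prev
    return x
```

At the last step the known region is copied from x0 exactly, so only the masked-out pixels are generated.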
